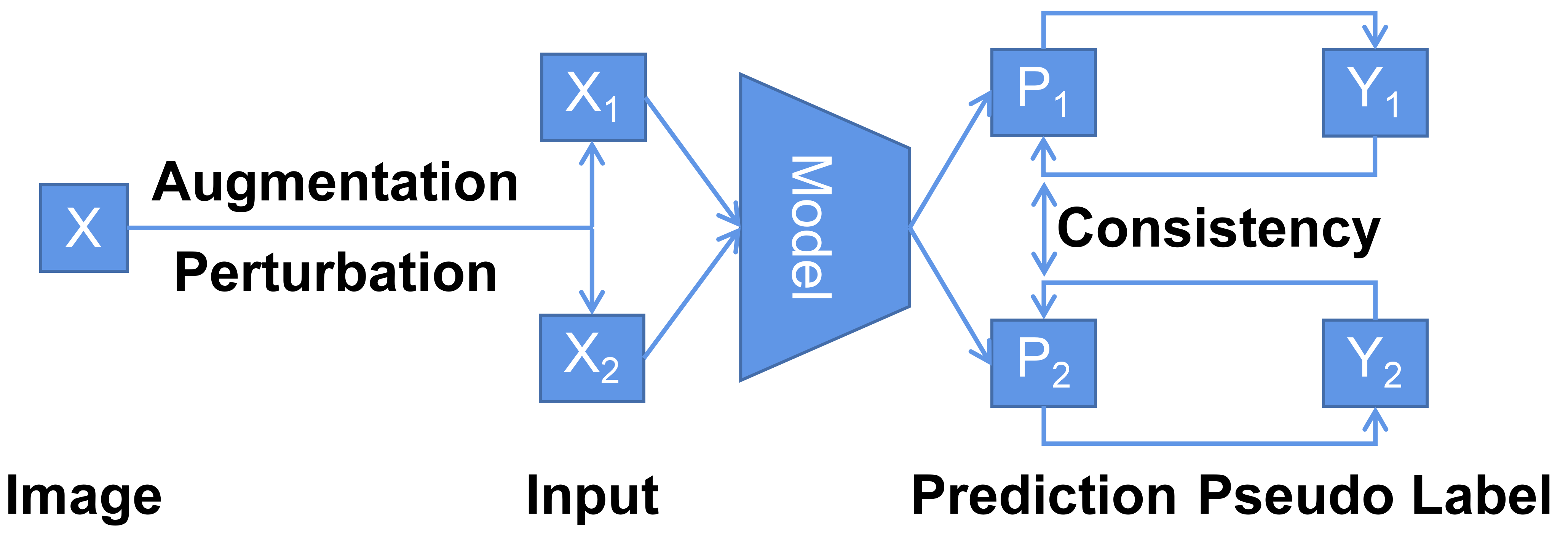

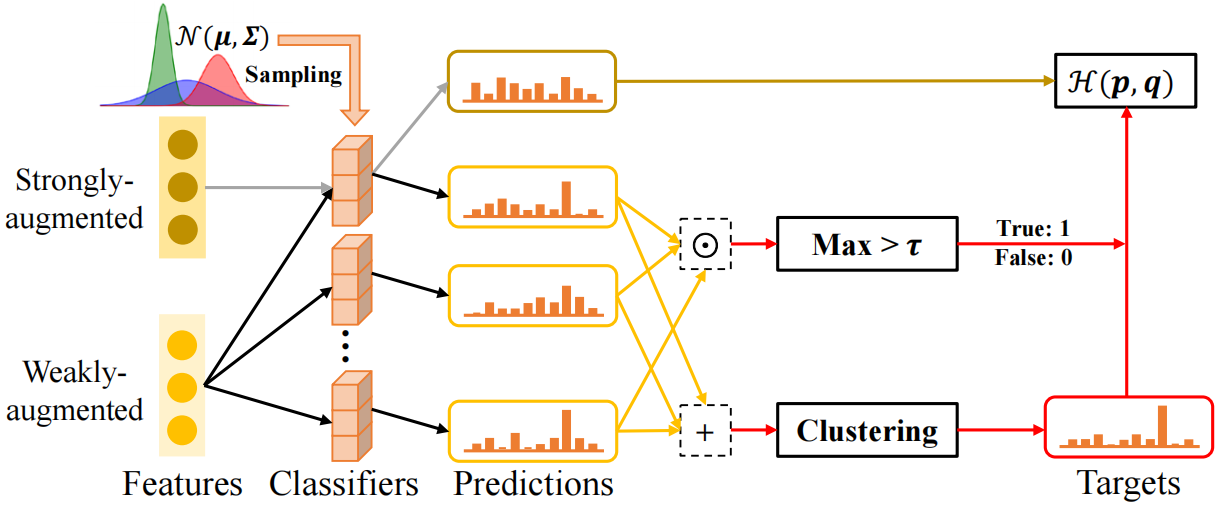

Diagram of our stochastic consensus (STOCO) method. To implement the proposed consistency criterion, we sample multiple classifiers from a learned Gaussian distribution. For the weakly-augmented version of each unlabeled sample, we compute the element-wise product of the category predictions from these stochastic classifiers and select the sample if the maximum entry of this product exceeds a predefined threshold $\tau$. We then average the predictions of the sampled classifiers and generate pseudo labels from these averages via deep discriminative clustering. Finally, with these targets, the model is trained on the strongly-augmented versions of the selected samples using a cross-entropy loss.
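The pipeline in the caption can be illustrated with a minimal NumPy sketch of one forward pass. It rests on several assumptions that the caption does not state. The stochastic classifiers are modeled as a linear head whose weights follow a diagonal Gaussian with mean `mu` and standard deviation `sigma`. The pseudo-label step uses a DEC-style target distribution (square the averaged predictions, normalize by per-cluster frequency) as a plainly swapped-in stand-in for deep discriminative clustering, so it is not the paper's clustering objective. All function names are hypothetical, and no gradients are computed.

```python
import numpy as np


def softmax(logits):
    # Numerically stable softmax over the last (class) axis.
    z = logits - logits.max(axis=-1, keepdims=True)
    e = np.exp(z)
    return e / e.sum(axis=-1, keepdims=True)


def sample_classifiers(mu, sigma, num_classifiers, rng):
    # Assumption: linear classifier weights W_m = mu + sigma * eps, eps ~ N(0, I).
    eps = rng.standard_normal((num_classifiers,) + mu.shape)
    return mu + sigma * eps  # shape (M, D, K)


def select_and_average(feat_weak, weights, tau):
    # Predictions of every sampled classifier on the weak view: (M, N, K).
    probs = softmax(np.einsum("nd,mdk->mnk", feat_weak, weights))
    consensus = probs.prod(axis=0)        # element-wise product over classifiers: (N, K)
    mask = consensus.max(axis=1) > tau    # keep samples whose largest entry exceeds tau
    return mask, probs.mean(axis=0)       # averaged predictions: (N, K)


def clustering_targets(p, eps=1e-12):
    # Stand-in for deep discriminative clustering (DEC-style target, an assumption):
    # square the predictions, divide by per-cluster frequency, renormalize each row.
    q = p ** 2 / (p.sum(axis=0, keepdims=True) + eps)
    return q / q.sum(axis=1, keepdims=True)


def cross_entropy(logits, labels):
    # Mean cross-entropy of hard labels under softmax(logits).
    z = logits - logits.max(axis=1, keepdims=True)
    log_p = z - np.log(np.exp(z).sum(axis=1, keepdims=True))
    return float(-log_p[np.arange(len(labels)), labels].mean())


def stoco_loss(feat_weak, logits_strong, mu, sigma, num_classifiers, tau, rng):
    # One hypothetical STOCO step: sample, select, average, pseudo-label, train on strong view.
    weights = sample_classifiers(mu, sigma, num_classifiers, rng)
    mask, mean_probs = select_and_average(feat_weak, weights, tau)
    if not mask.any():
        return 0.0, mask  # no sample passes the consensus threshold
    labels = clustering_targets(mean_probs[mask]).argmax(axis=1)
    return cross_entropy(logits_strong[mask], labels), mask
```

In practice `logits_strong` would come from the same network applied to the strongly-augmented inputs, and the loss would be backpropagated through a framework with automatic differentiation. Taking the product rather than the mean for selection means a sample passes only if every sampled classifier is confident in the same category, which is stricter than thresholding the averaged prediction.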